“I've never seen any technology advance faster than this. Artificial intelligence compute coming online appears to be increasing by a factor of ten every six months. Obviously, that can’t continue at such a high rate forever but…I've never seen anything like it…the chip rush is bigger than any gold rush that has ever existed.”

Elon Musk, Bosch Connected World Conference, March 2024

The semiconductor industry has periodically been reshaped by tectonic shifts in the broader computing landscape. While the foundational geometric scaling of silicon technology has followed a secular trend established by Moore’s Law, every successive era of computing over the last six decades has fundamentally transformed the semiconductor industry that drove it forward. We are in the early days of the next inflection.

The PC era that began in the 1980s established the CPU as the enabling silicon platform, which in turn shaped the evolution of semiconductor technology and cemented the pre-eminence of the Integrated Device Manufacturing (IDM) business model over the following decade. The mobile era that began in the mid-2000s established the mobile SoC as the enabling silicon platform and ushered in the pre-eminence of the foundry-fabless ecosystem over the decade that followed. The AI era is still in its early days, yet it has already begun to alter the contours of the semiconductor landscape.

Three major shifts are redefining the semiconductor landscape today. A decade from now, these will likely be seen as the foundational transformations of the semiconductor industry catalyzed by the emergence of AI computing.

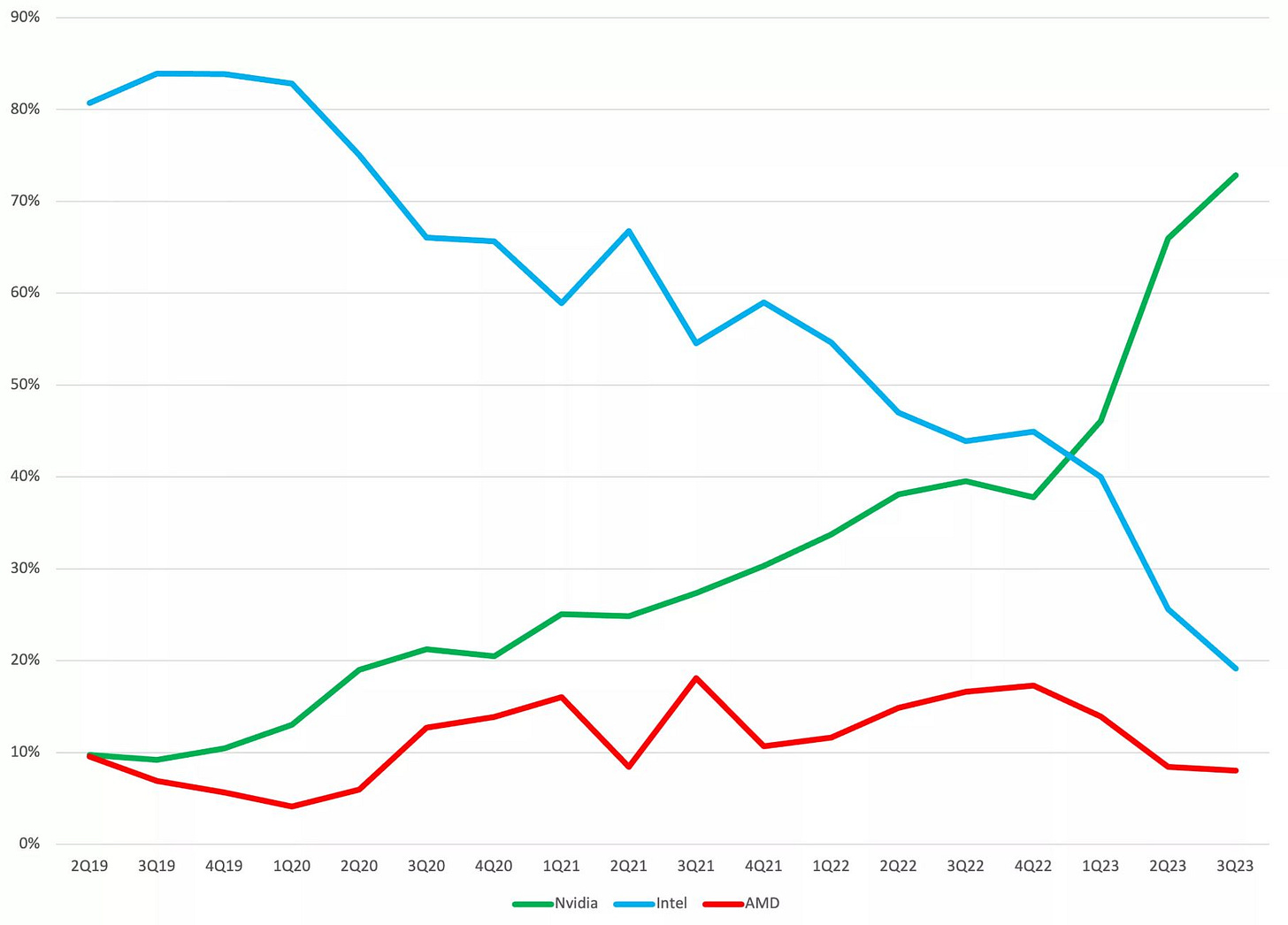

One: From CPUs to GPUs

“…because of this new machine learning paradigm, the kinds of computations we want to run are quite different than traditional handwritten twisty C++ code that a lot of basic CPUs were designed to run effectively, and so we want different kinds of hardware in order to run these computations more efficiently. And we can actually in some sense focus on a narrower set of things we want computers to do and do them extremely well and extremely efficiently, and then be able to have you know that increasing scale actually be even more possible.”

Jeff Dean, Chief Scientist, Google DeepMind and Google Research, February 2024 (Link)

Insofar as the Central Processing Unit (CPU) has been "central" to high-performance computing in datacenters, that central role is now increasingly being filled by the Graphics Processing Unit (GPU). A more technically accurate descriptor of this shift would be "from Scalar to Vector" or "from Scalar to Tensor", reflecting the underlying transition in computer architecture.

The "GPU" moniker is now an amusing misnomer, since the GPUs that enable AI computing are no longer dedicated graphics processors at all. They are simply general-purpose, programmable, shader-based vector computers that happen to be highly power-efficient for massively parallel machine learning workloads, which tend to be dominated by matrix multiplication and other linear algebra primitives. While GPUs are the dominant platform for machine learning today, other custom-designed vector- or tensor-based architectures can be as efficient as GPUs, if not more so, for reduced-precision linear algebra workloads.
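To make the "scalar to tensor" point a little more concrete, here is a minimal Python sketch of a naive matrix multiplication written as scalar loops. It is an illustration only, not how any GPU library implements matmul, and the function names (`output_element`, `matmul_scalar`, `count_multiply_adds`) are hypothetical. The key property is that every output element C[i][j] is an independent dot product: a scalar CPU core walks through the multiply-adds one at a time, whereas vector and tensor hardware can compute many of those independent elements at once.

```python
from typing import List

Matrix = List[List[float]]


def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """Dot product of row i of `a` with column j of `b`.

    It only reads its inputs and writes nothing shared, so every (i, j)
    pair could in principle be computed concurrently by a separate lane.
    """
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_scalar(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix multiply: one scalar multiply-add at a time (illustrative)."""
    n, inner, m = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [[output_element(a, b, i, j) for j in range(m)] for i in range(n)]


def count_multiply_adds(n: int, inner: int, m: int) -> int:
    # n * m independent dot products, each needing `inner` multiply-adds.
    return n * inner * m


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul_scalar(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
    # A single 4096 x 4096 x 4096 product already needs ~68.7 billion multiply-adds.
    print(count_multiply_adds(4096, 4096, 4096))  # 68719476736
```

The scale of that last number, for just one layer-sized product, is why hardware that trades scalar flexibility for wide, parallel multiply-add throughput wins on these workloads.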

“…it seems like the world is going to be very compute constrained for a while and I think almost all of the datacenters around the world will over time be AI instead of conventional CPUs…if you ask what percentage of the energy (in datacenters) is being used for neural nets, it's going to over time be probably 80-90%...”

Elon Musk, January 2024

In the PC era, the CPU quickly became the primary volume driver and largest revenue generator for the semiconductor industry and, in turn, the foundational platform that drove innovation in silicon technology. As the primary beneficiary of this shift to CPU-centric computing, Intel was able to set the pace and direction of Moore's Law during the PC era. Transistor technology was defined and optimized to be "CPU-first", as evidenced by Intel's groundbreaking transistor innovations through the 1990s and 2000s. Foundry suppliers would later adapt and waterfall these innovations to suit the needs of other applications.

In the mobile era, the Application Processor Unit (APU), which integrated a CPU and a GPU with a host of other functionality in a single System on a Chip (SoC), drove 10X higher unit volumes than the standalone CPU and became the primary volume driver for the semiconductor foundry ecosystem. Over the last decade, the dominance of the mobile SoC platform ensured that the APU set the pace and direction of Moore's Law; Apple's iPhone roadmap has an outsized influence on TSMC's process technology cadence and roadmap. Transistor technology is now optimized to be "mobile SoC-first", to be adapted later for other applications.

An interesting question, then, is how this dynamic may shift in the AI era. The datacenter GPU has already established itself as the primary enabler of generative AI model training workloads and has driven exponential revenue growth for product designers (e.g. NVIDIA) and silicon foundry suppliers (e.g. TSMC). Over time, GPUs will drive increasing wafer volumes for foundry suppliers. In addition, since datacenter GPUs are a system solution rather than a discrete chip solution, they already drive large (if not the largest) unit volumes for system integration and advanced packaging technologies (e.g. CoWoS at TSMC). NVIDIA is now TSMC's second-largest customer by revenue (Link), and the GPUs it builds are bound to play an outsized role in setting the cadence and roadmap of foundry process and packaging technology.

“There’s about a trillion dollars’ worth of installed base of data centers. Over the course of the next four or five years, we’ll have two trillion dollars’ worth of data centers that will be powering software around the world.”

Jensen Huang, January 2024

Even if one assumes a $500B computing infrastructure buildout over the rest of the decade (half of the roughly $1T of new datacenter capacity implied by Jensen's estimate), that still implies massive demand growth for datacenter GPUs in the coming years. Datacenter GPUs used for AI training drive large revenue but significantly less wafer volume than CPUs. Over time, if GPUs also become the enabling platform for AI inference workloads, they will drive far more volume, comparable to, if not eclipsing, that of the mobile SoC. If this trend holds, the GPU could become the new "central" processing unit.
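As a rough back-of-envelope check, Jensen's figures imply growth from a $1T to a $2T installed base of datacenters, i.e. about $1T of new capacity; the $500B assumption is half of that. Spreading it evenly over the rest of the decade, taken here (as an assumption of this sketch, not a figure from the source) to be about six years, gives an annual run rate of roughly:

$$
\Delta = \$2\text{T} - \$1\text{T} = \$1\text{T}, \qquad
\tfrac{1}{2}\,\Delta = \$500\text{B}, \qquad
\frac{\$500\text{B}}{6\ \text{years}} \approx \$83\text{B per year}
$$

Even this deliberately conservative, evenly spread scenario represents a very large annual spend on datacenter compute.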

“Inference is incredibly hard…the goal of somebody who's doing inference is to engage a lot more users, to apply the software to a large installed base…And so, the problem with inference is that it is actually an installed base problem and that takes enormous patience, years and years of success and dedication to architectural compatibility.”

Jensen Huang, March 2024, on why he believes GPU solutions will win in AI inference.

Two: From Chips to Systems

A defining trait of the PC platform was its ability to be easily modularized. This allowed the PC industry to become horizontal and enabled a wave of independent original equipment manufacturers (OEMs) and original design manufacturers (ODMs) to thrive. Michael Dell famously started assembling PCs in his dorm room and grew his startup into a multi-billion-dollar enterprise. ASUS, Compaq, HP and many other PC vendors built highly successful businesses by assembling the outputs of discrete component manufacturers. And because the CPU was the primary unit of compute for the PC, Intel became the largest semiconductor beneficiary of this modularization. Even though Intel supplied only one component (the CPU), it enjoyed an outsized revenue share in the PC market.

In the mobile era, some single-chip (or single-component) suppliers did become large players (e.g. Qualcomm with its modem chips), but they could not reach the dominance that the CPU gave Intel in the PC era, because the largest revenue share in the mobile era went to companies building integrated systems rather than single chips. Apple is the most extreme example of this value aggregation. Android phone manufacturers (e.g. HTC, Samsung, Google) were successful because they also achieved some level of system integration, but they could not command the profitability of a fully integrated provider like Apple. More importantly, full integration arguably enabled Apple to build a better product and to hone high-skill manufacturing processes and technologies that became a sustained competitive advantage for Apple and its outsourced assembly partner (Foxconn).

An equally significant but relatively unnoticed shift is underway in how modern AI computing systems are built and who is building them. NVIDIA is no longer just a chip (or even a graphics card) supplier; it builds the entire computer. The DGX box integrates 35,000+ components and weighs 70 lbs. Designing and building such a box is a high-skill and lengthy manufacturing endeavor in itself, much like Apple's design and production of the iPhone. Moreover, fewer than a dozen of those 35,000+ components are advanced-node silicon chips, and these include not just compute (CPU and GPU) chips but also custom-designed high-speed network processor chips. NVIDIA has become far more than a merchant chip company or even a semiconductor company: it is a systems (and software) company that designs complex computers and gets them built through a vast supplier network along a complex, global supply chain. That is an incredibly wide moat for any traditional discrete chip company to cross.

NVIDIA also offers an authorized reference design platform (HGX) that third-party OEMs can build upon to create custom variants. Pure-play third-party OEMs can assemble such systems too, just as they assembled PCs and servers earlier. However, given the complexity of these new systems and the tight integration required across every layer of abstraction, up to and including software, it remains to be seen whether pure-play third-party integrators can achieve best-in-class performance without reference designs from the primary vendor.

In essence, the modular unit of compute is shifting from a chip (e.g. a discrete CPU) to a tightly integrated system in a box (including CPUs, GPUs, networking, DRAM, I/O peripherals and much more). If the unit of compute for the datacenter is shifting from a chip to a box, highly integrated systems companies will capture an outsized share of total market revenue. It is interesting to note that AMD, as a discrete silicon provider (with the MI300 AI accelerator), gets just a fraction of the share of wallet that NVIDIA enjoys by selling the entire DGX computer (Link). Similarly, Intel Xeon CPUs are part of each DGX box that NVIDIA sells, yet Intel earns just a fraction of the premium that NVIDIA commands for the integrated box. As the unit of compute shifts from the chip to the system, the lion's share of semiconductor revenue in the AI era will go to systems companies rather than to discrete chip companies.

Three: From Horizontal to Vertical

“Vertically integrated firms will often dominate in the most demanding tiers of markets that have grown to substantial size, while a horizontally stratified, or disintegrated, industry structure will often be the dominant business model in the tiers of the market that are less demanding of functionality.”

Clayton M. Christensen (Link)

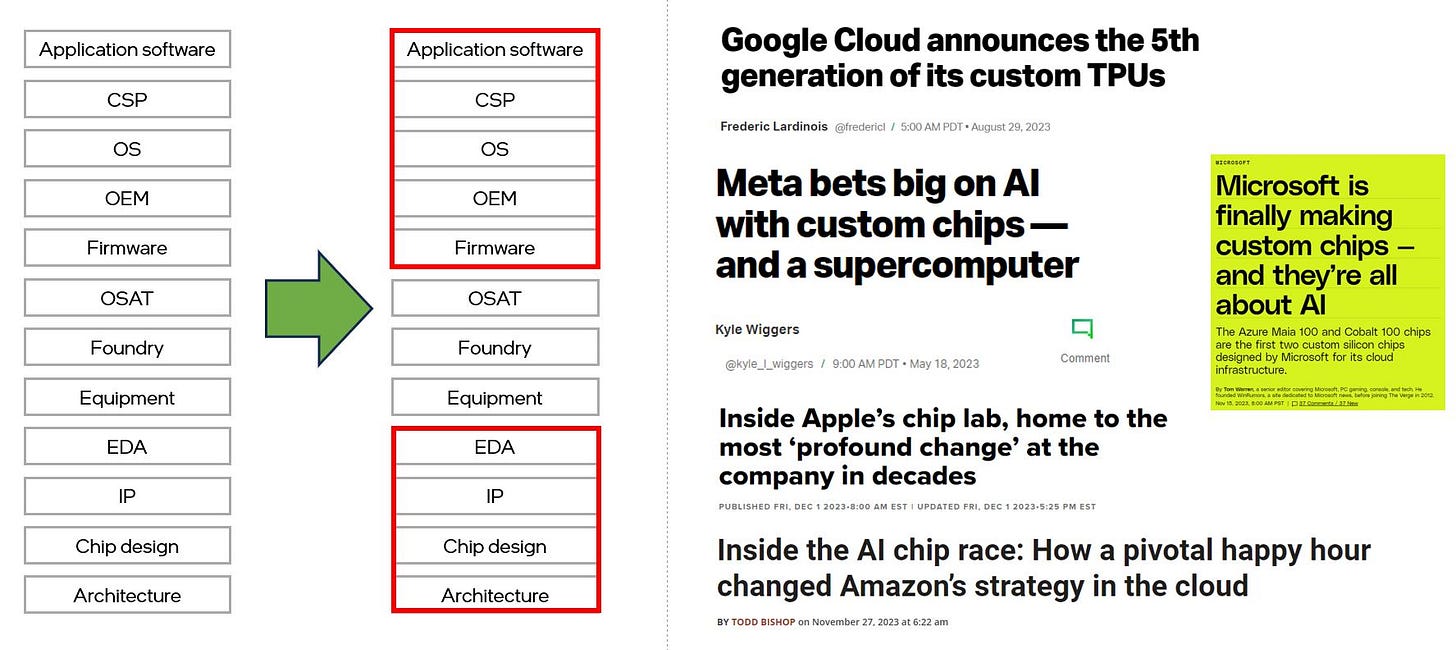

In the 1990s, Andy Grove astutely observed the transformation of computing from a vertically integrated business into a horizontal industry of independent businesses. This transformation provided the catalyst for integrated device manufacturers like Intel to thrive in the PC era. Intel famously established the predominant computer architecture (x86) and, by combining it with the ability to manufacture the most advanced chips at scale, became the largest semiconductor company in the world. The mobile era broke the dominance of the integrated design-and-manufacturing model and paved the way for the foundry-fabless business model to thrive. The AI era is facilitating the rise of computing as a pseudo-vertically integrated business. Several strategic reasons have driven this trend for over a decade, but the rapid rise of generative AI in just the last two years is accelerating this transformation and will have a significant impact on the traditional semiconductor industry landscape.

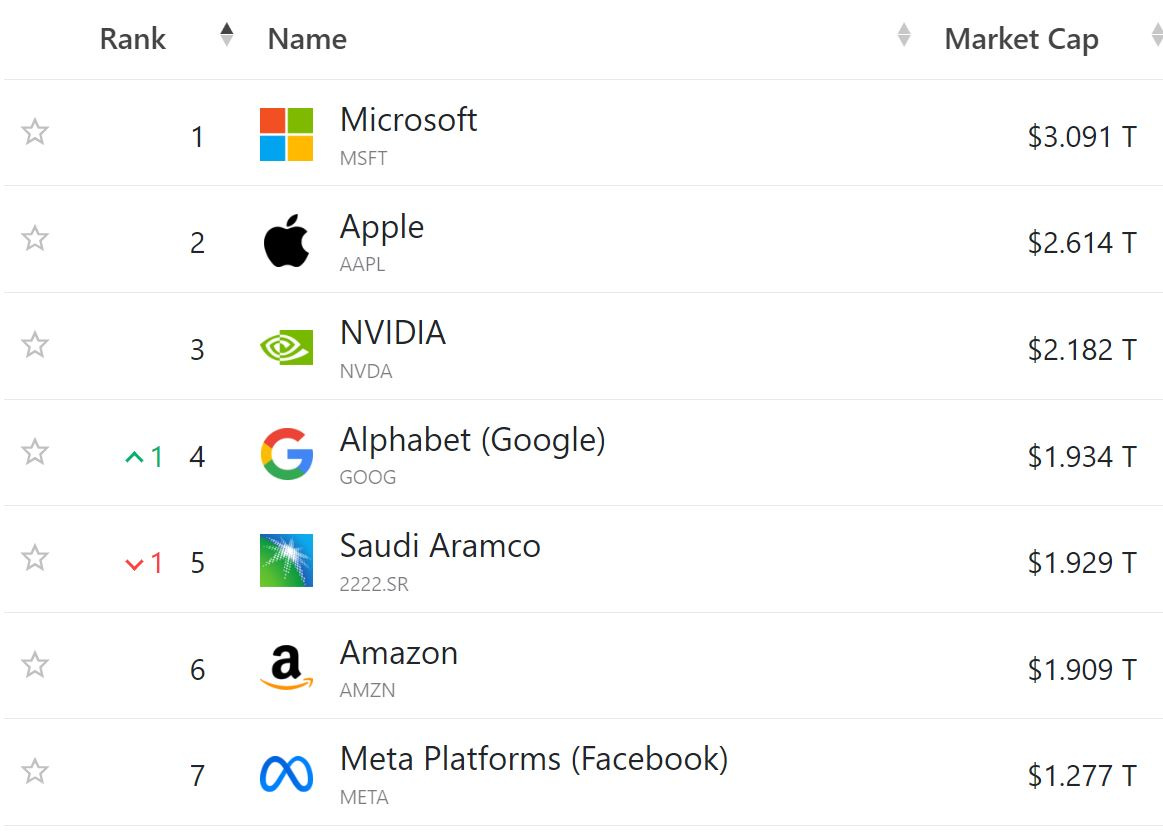

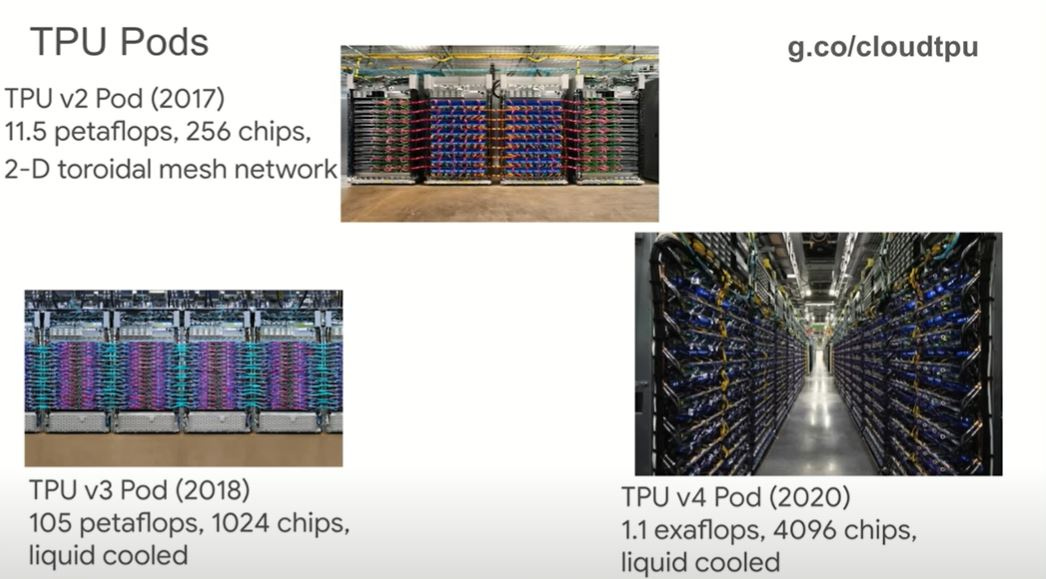

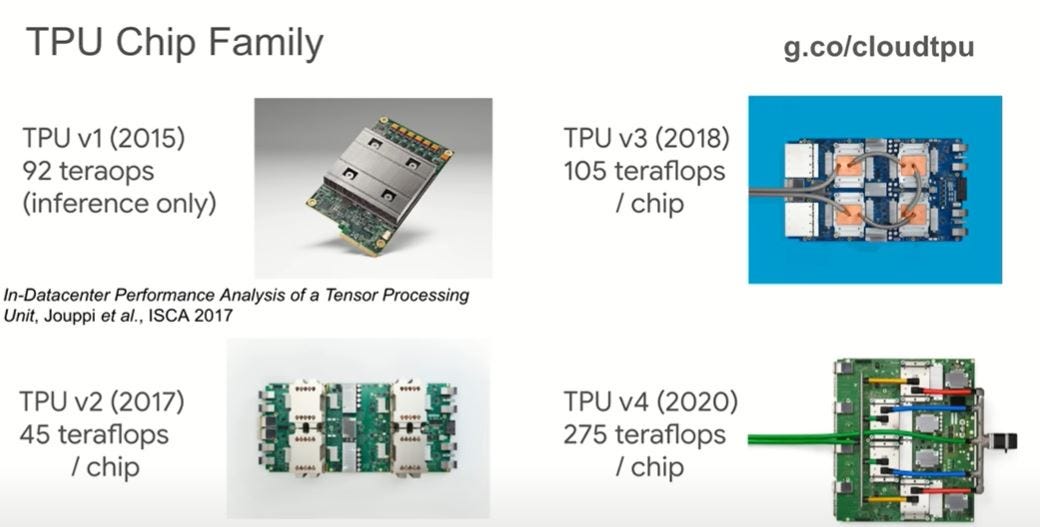

AI computing, especially datacenter training, represents the most demanding compute workload for semiconductors today and has now reached a scale where vertical integration down to silicon is not only economically viable but strategically vital, exactly as Clayton Christensen observed. Seven of the world's ten largest companies are now designing their own custom silicon (ranging from small ASICs to state-of-the-art general-purpose CPUs, networking processors and many other chips). The large cloud providers and technology companies started by designing discrete chips, but they quickly evolved to designing entire computing systems themselves; Google's TPU Pod and Tesla's Dojo are examples of this trend. It is interesting to note that the modular, horizontal semiconductor ecosystem that burgeoned and matured during the prior two eras of computing is now enabling today's largest companies to easily re-integrate vertically down the computing stack, with silicon wafer fabrication, packaging and assembly being the only functions that still need to be outsourced. Amazon, for example, started out designing networking processors and within a few years was designing domain-specific accelerators and general-purpose CPUs as well.

I have written about custom silicon development at AWS and Apple in earlier articles.

Microsoft, Amazon, Google, Tesla, Meta and others are designing not just their own chips, but entire high-performance computing systems, much like the NVIDIA DGX box described earlier.

OpenAI, which does not yet operate its own datacenters, is assembling its own hardware team too (Link). These companies will leverage the existing semiconductor manufacturing and assembly ecosystem to varying degrees to build their own compute systems, designed and optimized for their most prevalent and strategic workloads. As compute capacity becomes one of the most vital technology assets of the AI era, the strategic incentives for vertical integration will only grow stronger and will likely shift the balance of power in the pure-play semiconductor design landscape.

Summary

Every era of computing has initially supported a plurality of competing hardware and software solutions, but eventually the majority of market revenue has gone disproportionately to just one or two dominant entities: IBM (mainframes), DEC (minicomputers), Intel + Microsoft (PC), Apple + Google (mobile). Companies that successfully make the transition from discrete chips to integrated systems (including software) and from scalar-based to tensor-based architectures will be best positioned to win in the AI era of computing. Interestingly, the incumbent merchant chip and system providers will be competing fiercely not only amongst themselves and against a new crop of silicon, systems and software start-ups, but even more so against their own customers, who are integrating vertically down to silicon and building custom chips and systems to displace their suppliers.

The chip wars for supremacy in the AI era have begun – and this time, winning the battle will require more than just chips.

The views expressed herein are the author's own.

Great article Pushkar Ranade.
