“During the early stages of an industry, when the functionality and reliability of a product isn’t yet adequate to meet customer needs, a proprietary solution is almost always the right solution — because it allows you to knit all the pieces together in an optimized way. But once the technology matures and becomes good enough, industry standards emerge. That leads to the standardization of interfaces, which lets companies specialize on pieces of the overall system, and the product becomes modular. At that point, the competitive advantage of the early leader dissipates, and the ability to make money migrates to whoever controls the performance-defining subsystem.”

Clayton M. Christensen (2006)

Amazon Web Services (AWS) was launched in 2006. Just a few years later, in 2012, this software services provider veered away from its core competency to create a small silicon chip design team. Over the following decade, this team grew to build five separate product lines of custom silicon that now support the massive AWS cloud computing infrastructure. One of these chips, AWS Nitro, is currently in its sixth generation and is deployed in every new server installed in the AWS fleet, with over 20 million installed to date.

How did a software company with no prior semiconductor experience design and deploy advanced process node silicon at datacenter scale within just a decade? Why did it choose to do so? This paper outlines the history of and the motivation for custom silicon development at AWS, and its impact on other hyperscalers and incumbent merchant silicon providers alike. In addition to marking a strategic inflection point in the history of computing, silicon innovation at Amazon also serves as a case study illustrating the management principles of founder Jeff Bezos.

The Bezos Way

James Hamilton, Senior Vice President and Distinguished Engineer at Amazon and one of the original architects of the custom silicon program at AWS, outlined the history of silicon innovation at Amazon in a recent keynote and in an op-ed in the Wall Street Journal. His key messages reflect the strategic decision-making process at Amazon.

Start with the customer problem and work backward to design a solution

“Many organizations define their core competency by what they do. But when you define it by what your customers need, it opens the door to new opportunities. Although this approach comes with greater risk, it also comes with a greater reward.”

This approach of working backward from a customer problem all the way to its root cause was instrumental in creating Amazon's original bookselling business, and it later helped build the vast Amazon retail empire and other successful ventures, including Alexa and AWS.

In 2012, this approach also led AWS engineers to identify the need to offload hypervisor and authorization functions that were traditionally executed on the server CPU itself. By offloading these functions to dedicated hardware and software, they projected a significant improvement in datacenter compute efficiency and cost, because all of a server's precious compute resources could then be used for customer instances. This discovery was the genesis of the Nitro System, a series of add-on infrastructure cards based on a custom-designed chip called Nitro. In addition to freeing up more compute cores for customer workloads, the Nitro System also gave the AWS cloud a far greater degree of security and reliability, by serving as a gateway for all traffic into and out of AWS infrastructure and by eliminating the noisy-neighbor problem inherent to traditional hypervisors running on the host CPU. The latest generation of Nitro is built on TSMC's 7nm process.

The power of a written narrative

“When we have a new idea at Amazon, we share it with leadership in a six-page narrative format as opposed to a presentation. This approach has many benefits: It forces the author to clarify their thinking, focuses leadership on the idea instead of someone’s presentation skills, creates context for a focused discussion, and provides a timeless reference.”

Amazon's decision to start a custom silicon team in 2012 was rooted in two central observations that James Hamilton described in a long-form written narrative titled "AWS Custom Hardware". Jeff Bezos (then Amazon CEO) and Andy Jassy (then AWS CEO) reviewed this document, which articulated the central theses for why it was vital for AWS to build custom server chips and to develop silicon expertise all the way down to working with foundries.

Thesis #1: Volume and scale will drive innovation on Arm

Any idea or technology that gains critical mass and commands scale and volume is likely to become the de facto platform that drives the next wave of innovation. By 2012, about five years into the iPhone era, the explosive momentum of Arm in mobile computing was evident, and James argued that Arm and the Arm ISA would eventually drive the next wave of innovation and move up the stack to become a big part of server-side computing. James has been a prolific and well-known blogger for years, and he articulated this vision publicly as early as 2009 (Link). AWS was thus one of the earliest and strongest proponents of Arm servers and had been encouraging Arm to innovate in the server space. The belief was that Arm's mobile and IoT volumes would eventually fund the innovation necessary to drive server volumes as well.

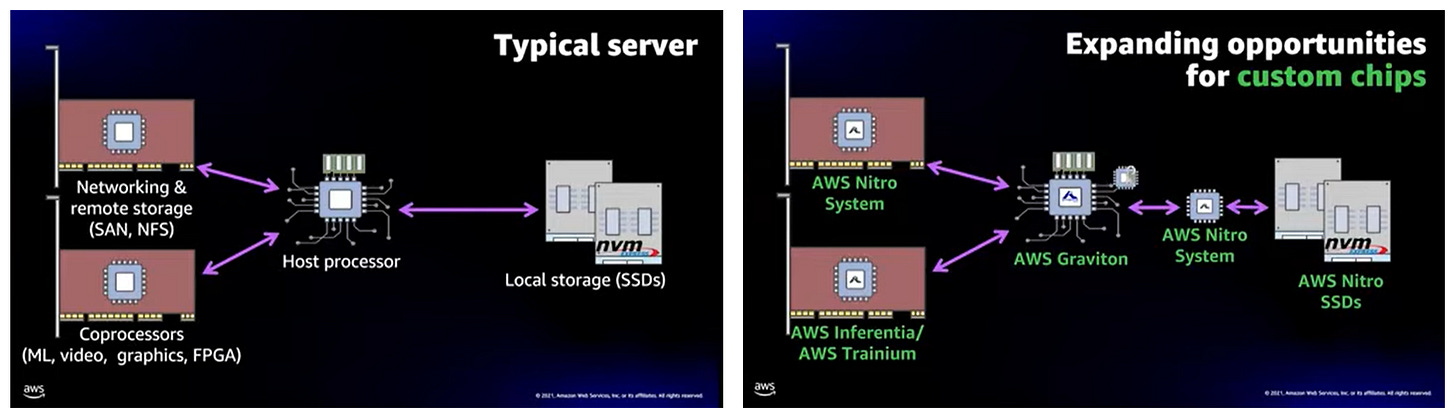

Thesis #2: Server integration will progress from board- to chip- to package-level

As early as 2012, it was clear that datacenter server functionality and innovation were increasingly being consolidated at the board level. The AWS team posited that this integration would only continue and would eventually consolidate within the silicon package itself. This observation borrowed heavily from the evolution of mobile computing, where more and more of a phone's silicon functionality was consolidated into the central application processor (AP) over time. James argued then that if building custom servers at scale was central to the AWS mission and value proposition, and if server innovation was consolidating onto a chip or package, then it was vital that AWS develop the expertise to design its own chips and packages.

Both arguments resonated with Jeff Bezos and Andy Jassy, who approved the strategy and authorized the project.

“If all of the innovation on a server is being pulled onto the chip, and if we don’t build the chip, then we don’t innovate. And that is just not a great place to be. And that’s just not how we work.”

One-way Door or Two-way Door

“We use the terms one-way door and two-way door to describe the risk of a decision at Amazon. Two-way door decisions have a low cost of failure and are easy to undo, while one-way door decisions have a high cost of failure and are hard to undo. We make two-way door decisions quickly, knowing that speed of execution is key, but we make one-way door decisions slowly and far more deliberately.”

Given the large and sustained capital investment needed, the decision to invest in custom silicon was deemed a one-way door decision. AWS management deliberately made the commitment with a long-term view and authorized the team to build or acquire the expertise needed for long-term success.

Flywheels to accelerate business growth

“By improving the customer experience and lowering costs, more customers use our solutions, which allows us to invest in further improvements and cost reductions, and the cycle continues.”

Another well-known Jeff Bezos strategy is the virtuous flywheel: the idea that any initiative that attracts new customers to the Amazon platform accelerates the growth of the entire platform. The launch of Nitro improved the AWS experience for early customers. This started a business flywheel, with early small wins building on each other to create momentum.

AWS has cited three ongoing benefits of its custom silicon program: the ability to design chips tuned to its own workloads and infrastructure (specialization and innovation), significantly faster turnaround times, and streamlined operations.

“If you can have a server within a server, it can do so much more – we can add so much value – but it cannot make the server more expensive in a material way, or it just won’t work. And if you think about that – the only way we can deliver that, the only way we can make that happen, is to vertically integrate. It means that server, which is being embedded in every one of our servers, and sometimes embedded many times, has to be absolutely as low cost as possible, which means that we are going to have to design the hardware, all the way down to the semiconductors – it means that we are going to have to get the part fabbed. The whole thing has to be done by AWS, because that is the only way we can deliver this vision.”

James Hamilton, AWS Senior Vice President and Distinguished Engineer

Working with Annapurna

In early 2012, AWS worked with Cavium (now part of Marvell) to build the first version of the Nitro chip. A key constraint the AWS team placed on Cavium was to build the chip (which was deemed to be a server within a server) without any substantial increase in server cost. The initial solution from Cavium was 10X more expensive than the AWS cost target, but AWS decided to take a chance and build a million units as a pilot. Once the effectiveness of the chip was established, AWS began to plan the second-generation Nitro. By this time, the internal memo on AWS Custom Hardware had already been presented to Jeff Bezos, and the strategy to start a custom silicon program was already in place. This is when James and AWS management came across the team from Annapurna Labs, a small start-up in Israel. The two teams started working together on next-generation processors, and the nimbleness of the Annapurna team accelerated the AWS custom silicon effort. The partnership went extremely well, and in 2015 AWS acquired Annapurna Labs (Link), making the Annapurna team the nucleus of subsequent custom silicon development within AWS.

Since then, AWS has continued to expand its portfolio with six generations of the Nitro System, two generations of Nitro SSD (solid-state drives), three generations of Graviton (a general-purpose Arm-based CPU), two generations of Trainium (a machine learning training ASIC) and Inferentia (a machine learning inference ASIC).

Within just one decade, AWS built multiple generations of custom silicon for every major chip in a server platform: infrastructure/networking, accelerators/co-processors and general-purpose host processors.

Hyperscalers go Vertical

It is important to place the AWS custom silicon strategy in the broader context of the evolution of cloud computing workloads and the cloud computing services business. AWS was an early mover among the hyperscalers in developing custom silicon; it would be several years before Google and Microsoft pursued similar efforts. Apple, although not a public cloud service provider (CSP), owns and maintains a large computing infrastructure for its iCloud-based services. Facebook, another top cloud customer, is also developing its own silicon (Link). Three of the largest Chinese cloud computing companies, Baidu, Alibaba and Tencent, are each developing their own custom silicon as well (Link). There are several important reasons for this growing trend, which will be explored in a future article.

The views expressed herein are my own.

I wonder if Thesis #1 ought to be generalized to "picking the right horse to ride," since the choice has to be reconsidered periodically as market dynamics change. Would James Hamilton still pick ARM as his horse today, in 2022? According to https://wccftech.com/x86-arm-rival-risc-v-architecture-ships-10-billion-cores/, RISC-V took only 12 years to reach 10 billion cores shipped, compared with 17 years for ARM. It is now projected to reach 80 billion cores in just 3 years, and it comes without ARM's licensing and royalty encumbrances.