Exponentially increasing silicon transistor density has been the foundational ingredient of progress in computing for nearly six decades. This increase in transistor density on a regular cadence, first observed and predicted by Intel co-founder Gordon Moore, was enabled by fundamental and relentless innovation in materials engineering, semiconductor process integration and solid-state devices. Yet holistic progress in computing required innovation across many more disparate disciplines, and all of this multi-disciplinary innovation had to be synchronized to realize the true promise of Moore’s Law. That coordinated innovation of such scope and at such scale continued unabated for six decades is a truly phenomenal human achievement.

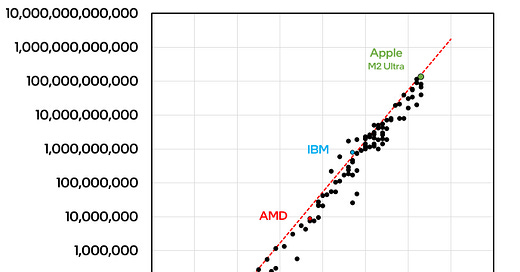

The microprocessor has embodied progress in computing for over five decades. Remarkably, transistor counts in a microprocessor have continued to double roughly every two years, regardless of the underlying microarchitecture, chip designer or silicon manufacturer. By 2030, we are likely to witness microprocessor products with over 1 trillion transistors in a package!

Understanding and appreciating what actually enabled the Moore’s Law exponential to continue for the last six decades may help us anticipate what comes next in computing. This multi-part essay attempts to articulate the inner mechanics that propelled the evolution of computing and to understand how exponential growth was realized and sustained at scale for so long.

Managing Complexity: From Atoms to Bits

Gordon Moore’s seminal observation specifically referred to increasing transistor counts on an integrated circuit. Yet realizing the promise of Moore’s Law required innovation not just in transistor process technology, but across every domain of computing, including software.

As transistor counts increased over time, every individual building block, from silicon processing to circuit design to computer microarchitecture all the way up to software, witnessed an exponential rise in complexity. Continued progress in computing was predicated upon managing this complexity effectively. This was achieved by breaking large, seemingly intractable domains of computing into smaller, more manageable ones. In fact, one of the primary enablers of Moore’s Law was the refactoring of computing from a single monolithic enterprise into a stack of independent enterprises – in well-defined abstraction layers from atoms to bits – with clear hand-offs and dependencies between layers that drove innovation and progress within each layer.

Over six decades, progressively refactoring computing and raising the level of abstraction has not only enabled engineers to manage exponentially growing complexity but has also given structure to the business and ecosystem of computing. It has created entirely new market segments, from foundries and equipment manufacturers working at atomic scale to cloud service providers and software developers working at datacenter scale.

Over six decades, computing evolved from a predominantly vertically integrated business into a well-defined stack of businesses that operate independently yet rely on each other to deliver progress in computing as a whole. With every passing decade, individual layers in the computing stack continued to establish themselves as standalone businesses and market segments.

For example, in the early years, it was the norm for a single semiconductor company to be vertically integrated and own nearly every aspect of its computing products: not just the design of chips and the manufacturing of wafers, but also intermediate steps like fab and test equipment, software to aid circuit design, packaging, and board-level assembly and testing. Over time, the investments required to remain competitive in each of these individual domains far exceeded the returns on those investments. Few companies operated at the scale necessary to make these investments cost-effective. This led to the creation of standalone assembly and test providers (1960s), standalone semiconductor equipment manufacturers (1970s) and standalone Electronic Design Automation (EDA) vendors (early 1980s). By the late 1980s, pure-play semiconductor foundries had emerged, allowing the creation of standalone “fabless” semiconductor design houses. The 1990s saw the emergence of standalone providers of reusable Intellectual Property (IP) that enabled designers to further abstract away complexity and speed up chip design. Even the Instruction Set Architecture (ISA), the foundation of the hardware-software contract, became available as licensable IP, further catalyzing the growth of the computing ecosystem by enabling many more companies to design computers. Progressively raising the level of abstraction in software enabled the rise of scores of independent application software developers. A multitude of advances in high-tech design, manufacturing and assembly enabled original equipment manufacturers (OEMs) and original design manufacturers (ODMs) to create products in a variety of form factors, from mainframes to smartphones, that made computing affordable, accessible and usable by the vast majority of humanity.

The transformation of computing from a monolithic enterprise into a stack of distinct yet interdependent layers was in some ways an inevitable business outcome driven by the growing scale and investment profile needed within each layer. However, this refactoring was also vital to spur innovation, efficiency and creativity within each layer. It is arguable that this stratification was an essential element in realizing the full promise of Moore’s Law and managing the complexity spiral unleashed by exponentially growing transistor counts.

Abstract and Refactor

“The essence of abstraction is preserving information that is relevant in a given context, and forgetting information that is irrelevant in that context.”

John V. Guttag, Introduction to Computation and Programming Using Python

Managing complexity via refactoring and abstraction has been the hallmark of nearly every major computing domain. In the absence of such abstraction, it would have been impossible for the various elements of computing to keep pace with the increase in transistor density driven by Moore’s Law. Breaking down large problems into more tractable ones also enabled a vigorous competition of ideas within each layer.

A classic example of the use of abstraction is in networking, where layered reference models such as the seven-layer OSI model provided a modular and hierarchical approach to the larger idea of networking, allowing different components and protocols to work together to enable reliable and efficient communication across networks. Such a conceptual framework made network protocols and communications easier to understand and allowed an entire industry to progress via numerous independent businesses.
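To make the layering idea concrete, here is a minimal Python sketch of a protocol stack. Each layer prepends its own header on the way down and strips only that header on the way up, treating everything else as an opaque payload: it keeps the information relevant to its own context and ignores the rest, as in the Guttag quote above. The layer names loosely follow TCP/IP, but the bracketed text headers and the `Layer`, `send` and `receive` names are hypothetical placeholders, not real packet formats or APIs.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """A toy protocol layer that only knows its own header format."""
    name: str

    def wrap(self, payload: bytes) -> bytes:
        # Prepend this layer's header; the payload is treated as opaque bytes.
        return f"[{self.name}]".encode() + payload

    def unwrap(self, frame: bytes) -> bytes:
        # Strip only this layer's header and hand the rest upward untouched.
        header = f"[{self.name}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"{self.name}: unexpected header")
        return frame[len(header):]


# Hypothetical four-layer stack, ordered from top (application) to bottom (link).
STACK = [Layer("app"), Layer("transport"), Layer("network"), Layer("link")]


def send(message: bytes) -> bytes:
    frame = message
    for layer in STACK:  # travel down the stack, each layer adding a header
        frame = layer.wrap(frame)
    return frame


def receive(frame: bytes) -> bytes:
    for layer in reversed(STACK):  # travel back up, each layer removing its header
        frame = layer.unwrap(frame)
    return frame


wire = send(b"hello")
print(wire)           # b'[link][network][transport][app]hello'
print(receive(wire))  # b'hello'
```

Because `send` and `receive` only iterate over `STACK`, any one layer could be replaced by a different implementation without its neighbors noticing, which is the kind of modularity that let independent businesses build on each layer.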

Nearly every domain of computing evolved via abstraction and refactoring into individual layers, enabling the effective management of growing complexity within every single layer.

To manage the growing complexity of chip design, engineers relied on computer-aided design (CAD) tools to help automate the work. CAD evolved to become an industry in its own right and came to be known as Electronic Design Automation (EDA). With every 10X increase in transistor count, there was at least one significant EDA innovation that progressively raised the level of abstraction and made it easier for designers to manage large and complex chip designs.

A major innovation in EDA emerged with roughly every 10X increase in transistor count. Every successive innovation progressively raised the level of design abstraction.

Starting with hand-drawn logic designs and Karnaugh maps, these innovations led to progressively more sophisticated software tools and languages, such as hardware description languages (HDLs), that enabled designers to abstract away complexity. In recent years, chip design has grown complex enough to require enormous amounts of compute and storage capacity and a vast network infrastructure. This datacenter-scale requirement has made it necessary for EDA tools to run in large clouds owned by cloud service providers (CSPs), or hyperscalers. Cloud-based EDA, with access to virtually unlimited compute and storage, is now paving the way to raise the design abstraction further through AI-enabled chip design. In just a few years, it may be possible to accomplish complex circuit design tasks with code written not by humans, but by software following human instructions in conversational English, as discussed here:

Software 2.0 and the Future of Chip Design

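The shift from hand-drawn gate-level logic to higher-level descriptions can be illustrated with a one-bit full adder. The sketch below uses plain Python as a stand-in for an HDL (it is not Verilog or VHDL). One function wires individual gates, the other only states the arithmetic intent, and a brute-force check confirms that the two agree on every input. The function names are hypothetical, and production EDA equivalence checkers handle far larger designs using techniques such as BDDs or SAT solving rather than enumerating every input.

```python
from itertools import product


def full_adder_gates(a: int, b: int, cin: int) -> tuple[int, int]:
    """Gate-level description: XOR, AND and OR gates wired explicitly."""
    s1 = a ^ b                      # first XOR stage
    total = s1 ^ cin                # sum bit
    carry = (a & b) | (s1 & cin)    # carry-out from two AND gates and an OR gate
    return total, carry


def full_adder_behavioral(a: int, b: int, cin: int) -> tuple[int, int]:
    """Behavioral description: states what is computed, not which gates compute it."""
    result = a + b + cin            # integer in the range 0..3
    return result & 1, result >> 1  # (sum bit, carry bit)


def equivalent(f, g, n_inputs: int) -> bool:
    """Exhaustively compare two descriptions over every combination of input bits."""
    return all(f(*bits) == g(*bits) for bits in product((0, 1), repeat=n_inputs))


print(equivalent(full_adder_gates, full_adder_behavioral, 3))  # True
```

Exhaustive enumeration doubles in cost with every added input bit, which is one small illustration of why each jump in design size has demanded new automation rather than more manual effort.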

What is Next?

By the end of this decade, we will witness yet another 10X increase in transistor count within a microprocessor package – an astounding 900 billion additional transistors beyond today’s state of the art!
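These figures follow from the two-year doubling cadence mentioned earlier. If transistor count is modeled as N(t) = N0 × 2^(t/T) with a doubling period T of 2 years, a 10X increase takes T × log2(10), or about 6.6 years. The sketch below checks that arithmetic. The 100-billion baseline is an assumption implied by the essay's "10X" and "900 billion" figures, not a measured count for any specific product, and the helper names are hypothetical.

```python
import math

DOUBLING_PERIOD_YEARS = 2.0  # doubling cadence cited in the essay


def projected_count(n0: float, years: float, period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Transistor count after `years`, assuming N(t) = N0 * 2**(t / T)."""
    return n0 * 2 ** (years / period)


def years_to_grow(factor: float, period: float = DOUBLING_PERIOD_YEARS) -> float:
    """Years needed to grow by `factor`: t = T * log2(factor)."""
    return period * math.log2(factor)


# Assumed baseline of ~100 billion transistors per package (implied, not measured).
baseline = 100e9
target = 10 * baseline

print(f"Years for a 10X increase: {years_to_grow(10):.1f}")                 # 6.6
print(f"Additional transistors: {(target - baseline) / 1e9:.0f} billion")   # 900
print(f"Count after 7 years: {projected_count(baseline, 7) / 1e12:.2f} trillion")  # 1.13
```

At this cadence, roughly seven years of doubling is enough to carry a package from about 100 billion to over 1 trillion transistors, which is consistent with the 2030 projection earlier in the essay.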

What innovations will enable this massive improvement? How will chip designers deal with the additional complexity of designs with hundreds of billions of transistors? What kinds of computers will architects be able to build with a trillion transistors at their disposal? These questions will likely be addressed the same way they have been in every prior 10X increase in transistor count to date: by further raising the level of abstraction and refactoring the computing stack.

New cloud-based, AI-enabled design automation tools will emerge to automate the otherwise untenable task of managing designs with ultra-large transistor counts. This will lessen the burden on chip designers, freeing them to invent novel ways to leverage a trillion transistors in a package.

A new chiplet abstraction layer will likely be introduced to manage complexity and raise the level of abstraction at the system level. This will enable system architects to pick and choose from a ready-to-order library of domain-specific chiplets and focus on connecting these chiplets rather than on designing the chiplets themselves. In the 1990s, the third-party, licensable IP revolution democratized computing by creating a rich ecosystem of independent IP companies. Similarly, a rich ecosystem of companies building a variety of domain-specific chiplets with universal connectivity standards is likely to emerge and become a vital component of system architecture. Such a chiplet ecosystem will further democratize innovation in computing and make it possible for many more companies to design the advanced computing systems of the future.
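A minimal sketch of the chiplet abstraction described above, assuming a hypothetical catalog in which each chiplet advertises its function and the die-to-die interface it implements. The system architect only selects parts and declares links, and the `assemble` helper rejects any link between mismatched interfaces, standing in for the role a universal connectivity standard would play. All chiplet names, the `d2d-std` interface label and the helper itself are invented for illustration and do not model any real die-to-die specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chiplet:
    name: str       # hypothetical catalog part number
    function: str   # domain, e.g. "compute", "memory", "io"
    interface: str  # die-to-die interface the chiplet implements


# Hypothetical ready-to-order library; every entry is invented.
CATALOG = {
    "cpu-8c": Chiplet("cpu-8c", "compute", "d2d-std"),
    "ai-accel": Chiplet("ai-accel", "compute", "d2d-std"),
    "hbm-ctrl": Chiplet("hbm-ctrl", "memory", "d2d-std"),
    "legacy-io": Chiplet("legacy-io", "io", "proprietary"),
}


def assemble(names, links):
    """Compose a package from catalog parts; only same-interface pairs may connect."""
    parts = {n: CATALOG[n] for n in names}  # raises KeyError for unknown parts
    for a, b in links:
        if parts[a].interface != parts[b].interface:
            raise ValueError(
                f"cannot connect {a} ({parts[a].interface}) to {b} ({parts[b].interface})"
            )
    return parts, list(links)


package, wiring = assemble(
    ["cpu-8c", "ai-accel", "hbm-ctrl"],
    [("cpu-8c", "ai-accel"), ("ai-accel", "hbm-ctrl")],
)
print(sorted(package))  # ['ai-accel', 'cpu-8c', 'hbm-ctrl']
```

The architect's work shrinks to choosing parts and wiring them; the interface check is where a shared standard pays off, since any chiplet that implements it can be dropped in without redesigning its neighbors.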
