Programmable Hardware Comes of Age

A few years ago, Marc Andreessen made an eloquent argument for why software companies were poised to take over the world (Link). Andreessen gave several examples of relatively new software companies (Amazon and Netflix, to name a few) that were disrupting their traditional brick-and-mortar counterparts. In the few years since Andreessen’s observation, Borders and Blockbuster have disappeared, and many new companies are bolstering the case for software eating the world. The world’s largest taxi company today (Uber) is in fact a software company and doesn't own a fleet of cars. The world’s largest accommodation provider (Airbnb) is also a software company, yet it doesn't own a single room. These are just a few of the incredible disruptions happening in our time.

Coming from a semiconductor background, I often think about technology transformations from a silicon (i.e., hardware) perspective. Recent trends have convinced me that the semiconductor landscape is poised for a transformation of its own (you can read some of my earlier posts on this topic here). Software has disrupted e-commerce, digital content, taxis, hotels and scores of other businesses; can it do the same to the hardware business itself? Eventually, could software take a bite out of traditional silicon chip design too?

Evolution of Computer Architecture

A sweeping visualization of the history of computer architecture is shown below in a chart compiled by Prof. Mark Horowitz and his team at Stanford University.

Over four decades, continuous advances in silicon technology delivered a steady increase in the number of transistors on a chip (Moore’s Law), accompanied by a phenomenal increase in clock frequency (speed). During the first three decades of that journey, chip designs mainly employed a single CPU core. Around the turn of the century, as single-threaded, serial processing performance began to plateau, the solution seemed straightforward: add a second core and enable parallel processing. Soon, dual-core morphed into multi-core and then many-core processing. Adding more cores continued to improve chip performance for over a decade, but now even that option is running out of steam. Simply adding more cores provides only marginal returns.
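One standard way to see why extra cores eventually stop paying off is Amdahl's law: the part of a program that cannot be parallelized caps the total speed-up no matter how many cores are added. The text does not invoke Amdahl's law itself, so treat this as an illustrative model; the Python sketch below assumes a hypothetical workload in which 90% of the run time parallelizes perfectly.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speed-up on `cores` cores when only `parallel_fraction` of the work can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part never shrinks; only the parallel part is divided across cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    p = 0.9  # hypothetical: 90% of the run time parallelizes perfectly
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(f"{n:>2} cores: {amdahl_speedup(p, n):.2f}x")
    print(f"ceiling as cores grow without bound: {1 / (1 - p):.1f}x")
```

Under these assumptions, going from 32 to 64 cores adds less than 1x of speed-up, and no number of cores gets past 10x.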

Decades of technological advances that made silicon chips smaller, faster and cheaper have brought us to a point where the critical dimensions on a chip can be counted in atoms. While it is still fundamentally possible to continue geometrical scaling, the cost benefits that drove Moore’s Law in the past have greatly diminished. A new architectural paradigm will therefore be needed to keep improving chip performance and to enable the massive back-end computing infrastructure (datacenters) required to support the Internet of Things.

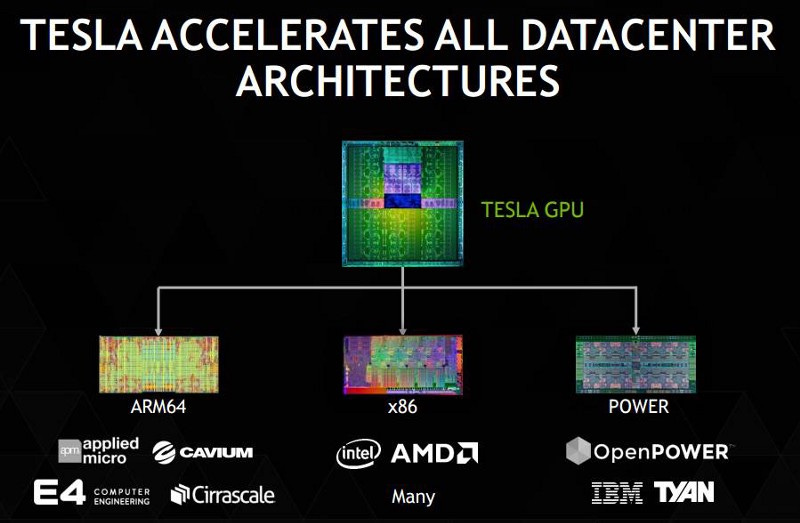

One way to extend the performance gains from a standalone CPU chip is to pair it with a “hardware accelerator” chip. The hardware accelerator chip can be either a field programmable gate array (FPGA) or a General Purpose Graphics Processing Unit (GP-GPU).

As I will explain shortly, each of these options involves software eating at least a portion of silicon chip design! This may not be the same disruption we saw in music, e-commerce, movies or taxi services, at least not yet. But it is fascinating to note that it is yet another example of software pushing the frontiers of technology and innovation.

Intel Acquires Altera

In June this year, Intel announced that it was acquiring FPGA maker Altera. Among the many op-eds trying to explain the rationale behind the deal, the one that resonated most with me was by Kurt Marko (Link).

“The only way the Altera deal makes sense is if we are on the precipice of a secular shift in system design, not unlike the transition from proprietary RISC CPUs to x86, in which rapid hardware customization is the best path to faster performance. If true, the Altera deal is Intel’s acknowledgement that the benefits of brute force, Moore’s Law scaling have shrunk and that continuing an upward performance trajectory is more dependent on system design than semiconductor physics.”

An FPGA is a blank slate composed of a vast array of programmable logic elements and switches that can be interconnected via a dense metal wiring stack. A configuration file, generated by software, can then be loaded onto this blank slate to “program” the physical hardware circuitry. The FPGA thus allows software to turn a generic chip into specialized circuitry, custom designed for a specific computation or workload. Moreover, if the nature of the computation changes over time, the chip can be reconfigured with a new configuration that makes the new workload run more efficiently. In principle, this is far more desirable than having to replace the hardware itself.
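Mainstream FPGA logic elements are built around small look-up tables (LUTs): a fixed block of storage bits addressed by the element's inputs. As a rough illustration of how loading a configuration “programs” unchanging hardware, here is a minimal Python sketch of a single LUT. The class and function names are hypothetical, and the model deliberately ignores the routing switches, flip-flops and other resources a real configuration also sets up.

```python
from itertools import product


class LookUpTable:
    """Simplified model of one k-input FPGA look-up table (LUT).

    The "hardware" never changes: 2**k storage bits plus a selector that uses
    the k inputs as an address. Loading different bits changes the function.
    """

    def __init__(self, num_inputs: int):
        self.num_inputs = num_inputs
        self.config = [0] * (2 ** num_inputs)

    def load(self, truth_table: list) -> None:
        """'Program' the LUT; entry i is the output for input pattern i."""
        if len(truth_table) != 2 ** self.num_inputs:
            raise ValueError("need one output bit per input combination")
        self.config = [1 if bit else 0 for bit in truth_table]

    def evaluate(self, *inputs: int) -> int:
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        address = 0
        for bit in inputs:  # the first input is the most significant address bit
            address = (address << 1) | (bit & 1)
        return self.config[address]


def truth_table(func, num_inputs: int) -> list:
    """Build configuration bits for `func` by enumerating every input pattern in order."""
    return [func(*bits) for bits in product((0, 1), repeat=num_inputs)]


if __name__ == "__main__":
    lut = LookUpTable(2)
    lut.load(truth_table(lambda a, b: a & b, 2))  # configure as AND
    print([lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)])
    lut.load(truth_table(lambda a, b: a ^ b, 2))  # same hardware, reconfigured as XOR
    print([lut.evaluate(a, b) for a, b in product((0, 1), repeat=2)])
```

The point of the sketch is that `evaluate` never changes; only the bits passed to `load` do, which is the sense in which software turns a generic chip into specialized circuitry.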

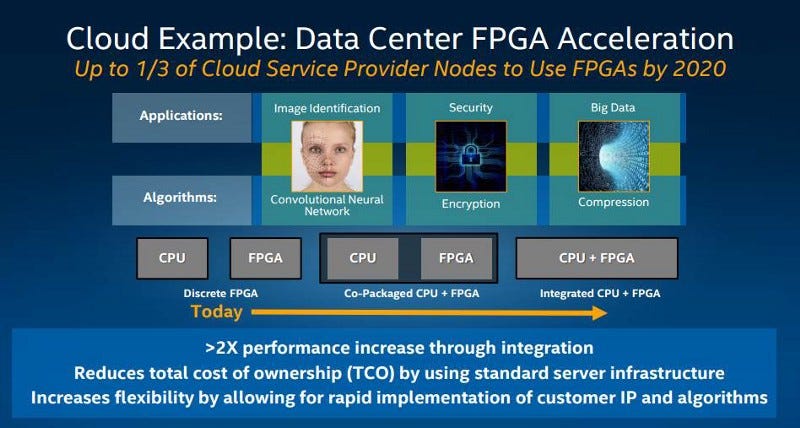

Pairing a programmable FPGA chip with a general-purpose CPU chip lets system architects divert specific workloads to the FPGA. Because the FPGA, unlike the general-purpose CPU, can be custom configured for those workloads, they are “accelerated” and run more efficiently. When the workload changes, the accelerator can be reconfigured for the new workload, delivering better performance without the need for a hardware or infrastructure upgrade.
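The offload pattern described above can be sketched as a host that sends a workload to the accelerator when a configuration for it exists, reconfigures only when the workload changes, and otherwise falls back to the CPU. This is a toy Python model, not any vendor's API: `Accelerator`, `Host`, the workload names and the “bitstreams” (plain Python functions standing in for FPGA configurations) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Accelerator:
    """Toy stand-in for a reconfigurable accelerator attached to a host CPU."""
    bitstreams: Dict[str, Callable]  # hypothetical: workload name -> "configured" kernel
    loaded: Optional[str] = None
    reconfigurations: int = 0

    def run(self, workload: str, data):
        if workload != self.loaded:  # reconfigure only when the workload changes
            self.loaded = workload
            self.reconfigurations += 1
        return self.bitstreams[workload](data)


@dataclass
class Host:
    accelerator: Accelerator
    cpu_kernels: Dict[str, Callable]
    log: List[str] = field(default_factory=list)

    def submit(self, workload: str, data):
        # Offload when a configuration exists for this workload; otherwise use the CPU.
        if workload in self.accelerator.bitstreams:
            self.log.append(f"{workload}: accelerator")
            return self.accelerator.run(workload, data)
        self.log.append(f"{workload}: cpu")
        return self.cpu_kernels[workload](data)


if __name__ == "__main__":
    acc = Accelerator(bitstreams={"sum_of_squares": lambda xs: sum(x * x for x in xs),
                                  "maximum": max})
    host = Host(acc, cpu_kernels={"sort": sorted})
    host.submit("sum_of_squares", [1, 2, 3])
    host.submit("sum_of_squares", [4, 5])  # same workload: no reconfiguration
    host.submit("maximum", [7, 2, 9])      # new workload: reconfigure
    host.submit("sort", [3, 1, 2])         # no configuration available: run on the CPU
    print(host.log, acc.reconfigurations)
```

Counting reconfigurations matters because switching an FPGA's configuration is not free; a real scheduler would weigh that cost against the expected gain.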

For example, Microsoft could use a pre-built configuration library to accelerate specific Bing queries at specific times of the day. Amazon could have unique configuration files to accelerate e-commerce workloads depending on time of day and location. Image recognition and voice search could each use their own configuration libraries too. Several companies, including Intel and Microsoft, have written about the performance advantages of programmable hardware accelerators, and benchmark tests show speed-ups of anywhere from 2X to 10X on workloads like image recognition, machine learning and convolutional neural networks (Read more here).
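Choosing a configuration by time of day amounts to a lookup in a sorted schedule. The Python sketch below is a hypothetical illustration, not how Microsoft or Amazon actually schedule their accelerators: the configuration file names and hour boundaries are invented, and `bisect_right` finds the last window that starts at or before the current hour.

```python
from bisect import bisect_right

# Hypothetical schedule sorted by start hour: (start hour, configuration file).
# The file names are invented for illustration; the first entry must start at hour 0.
SCHEDULE = [
    (0, "batch_indexing.cfg"),
    (7, "search_ranking.cfg"),
    (18, "image_recognition.cfg"),
    (22, "batch_indexing.cfg"),
]


def config_for_hour(hour: int, schedule=SCHEDULE) -> str:
    """Return the configuration whose time window contains `hour` (0-23)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be in 0..23")
    starts = [start for start, _ in schedule]
    index = bisect_right(starts, hour) - 1  # last window starting at or before `hour`
    if index < 0:
        raise ValueError("schedule must cover hour 0")
    return schedule[index][1]


def reconfiguration_points(schedule=SCHEDULE):
    """Hours (starting at midnight) at which the loaded configuration actually changes."""
    changes, current = [], None
    for hour in range(24):
        cfg = config_for_hour(hour, schedule)
        if cfg != current:
            changes.append((hour, cfg))
            current = cfg
    return changes


if __name__ == "__main__":
    for hour, cfg in reconfiguration_points():
        print(f"{hour:02d}:00 -> load {cfg}")
```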

Programmable hardware is thus a huge enabler of next generation high performance computing and is a key element of Intel’s datacenter roadmap.

General-Purpose GPU

Configuring an FPGA typically involves a hardware description language (HDL) such as Verilog or VHDL. This is not a trivial exercise, and it is not “high level coding” in the traditional sense (e.g., writing C code). Hence, configuring an FPGA requires engineers with knowledge of chip design: essentially chip designers, not pure code developers. Altera is trying to simplify the process with OpenCL, an open standard that lets programmers write code in a C-based language instead of low-level hardware languages. One could imagine that, over time, reconfiguring an FPGA could be elevated entirely to a level where a traditional “software engineer” could also reprogram the chip.
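To give a feel for the gap between describing hardware and writing C, here is a Python stand-in (not Verilog or VHDL) for a structural, gate-by-gate description of an adder, compared against the single `+` a C programmer would write. The function names are hypothetical. In fairness, real HDLs also let designers write `a + b` and have synthesis tools infer the adder; the harder part of HDL work is reasoning about wires, registers and clock timing, which this sketch does not model.

```python
def full_adder(a: int, b: int, carry_in: int):
    """One-bit full adder written as individual gates, in the style of a structural netlist."""
    partial = a ^ b                                # XOR gate
    total = partial ^ carry_in                     # XOR gate
    carry_out = (a & b) | (partial & carry_in)     # two AND gates feeding an OR gate
    return total, carry_out


def ripple_carry_add(x: int, y: int, width: int) -> int:
    """Chain `width` full adders; the result wraps modulo 2**width like a fixed-width register."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


if __name__ == "__main__":
    width = 8
    # At the "C level", the same computation is simply (x + y) % 2**width.
    assert all(
        ripple_carry_add(x, y, width) == (x + y) % 2 ** width
        for x in range(2 ** width)
        for y in range(0, 2 ** width, 7)
    )
    print(ripple_carry_add(200, 100, width))  # 44: 300 wraps modulo 256
```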

An alternative approach is to use a general-purpose GPU (GP-GPU) as the hardware accelerator. NVIDIA (NVDA) is a key proponent of this approach with its CUDA platform. CUDA is a general-purpose parallel computing platform and programming model, so the GPU is completely software programmable. As Jen-Hsun Huang, the CEO of NVIDIA, likes to point out, GP-GPUs are reprogrammable, while FPGAs are reconfigurable.
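In CUDA, the programmer writes a kernel that one GPU thread runs for one piece of data, and each thread finds its element from the built-in variables `blockIdx.x`, `blockDim.x` and `threadIdx.x`. The Python sketch below only mimics that indexing scheme sequentially, under the assumption of a 1-D grid; `launch_1d` and `saxpy_kernel` are hypothetical names, and a real GPU would run the threads concurrently.

```python
def launch_1d(kernel, num_elements: int, block_dim: int, *args) -> int:
    """Sequentially emulate a 1-D CUDA-style grid launch; returns the number of blocks."""
    grid_dim = (num_elements + block_dim - 1) // block_dim  # ceiling division: cover every element
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            # On a GPU these iterations would run concurrently as separate threads.
            kernel(block_idx, block_dim, thread_idx, num_elements, *args)
    return grid_dim


def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y):
    """Work done by one thread: a single element of y = a * x + y."""
    i = block_idx * block_dim + thread_idx  # mirrors blockIdx.x * blockDim.x + threadIdx.x
    if i < n:  # the last block may contain threads past the end of the array
        y[i] = a * x[i] + y[i]


if __name__ == "__main__":
    x = [float(i) for i in range(10)]
    y = [1.0] * 10
    blocks = launch_1d(saxpy_kernel, len(x), 4, 2.0, x, y)
    print(blocks, y)
```

Because the same kernel can be rewritten and relaunched at will, this is the sense in which a GP-GPU is “reprogrammable” rather than “reconfigured”.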

Software Taking a Bite out of Hardware

While not yet a perfect solution, programmable or configurable hardware clearly has the potential to deliver massive performance gains (2X or higher) compared with traditional Moore’s Law geometrical scaling (typically a 30% speed-up per node). The key to the success of accelerated computing is, of course, the ability to dynamically change the accelerator’s configuration to meet the demands of a changing workload. It is thus “software” that is enabling the next big advance in computing hardware. Accelerated computing may become the panacea for the rising complexity, rising cost and diminishing returns of traditional Moore’s Law scaling.
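The comparison between a 2X accelerator gain and roughly 30% per node can be turned into a count of process node transitions. The Python sketch below assumes, as a simplification made here for illustration, that the 30% gain compounds multiplicatively from one node to the next; the function name is hypothetical.

```python
import math


def nodes_to_match(target_speedup: float, gain_per_node: float = 0.30) -> int:
    """Smallest number of node transitions whose compounded gains reach `target_speedup`.

    Assumes every node multiplies performance by (1 + gain_per_node);
    real node-to-node gains vary.
    """
    if target_speedup <= 1.0:
        return 0
    # Solve (1 + g) ** n >= target for the smallest whole n.
    return math.ceil(math.log(target_speedup) / math.log(1.0 + gain_per_node))


if __name__ == "__main__":
    for target in (2, 10):
        print(f"{target}X needs about {nodes_to_match(target)} nodes at 30% per node")
```

Under this assumption, a 2X gain from acceleration is worth about three node transitions, and 10X about nine.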

While it remains to be seen how exactly the new developments play out, it is clear that programmable hardware will become a major computing platform in the coming years.
